MF-RSVLM

FUSE-RSVLM: Feature Fusion Vision-Language Model for Remote Sensing

Project Page | Paper | Model | Dataset

If this project helps you, please give us a star on GitHub.

Overview

MF-RSVLM is a remote sensing vision-language model (VLM). It combines a CLIP vision encoder, a two-layer MLP projector, and a Vicuna-7B LLM, and is trained in two stages for modality alignment and instruction following.

- Visual Encoder: CLIP ViT-L/14 336px

- Projector: 2-layer MLP

- LLM: Vicuna-7B v1.5

- Training: Pretrain (VersaD 1.4M image-text pairs) + SFT (instruction tuning)
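The shapes implied by this configuration can be worked out directly. The sketch below assumes MF-RSVLM keeps the LLaVA-1.5 conventions it initializes from. Those conventions are one visual token per 14×14 patch with the CLS token dropped, an `mlp2x_gelu` projector, a CLIP ViT-L hidden size of 1024, and a Vicuna-7B hidden size of 4096. The feature-fusion (deepstack) module may change the token count or the projector's input width, so treat these numbers as a baseline rather than the model's exact figures.

```latex
N_{\text{patch}} = \left(\frac{336}{14}\right)^2 = 24^2 = 576

\mathbf{h}_i = W_2\,\mathrm{GELU}(W_1 \mathbf{z}_i + \mathbf{b}_1) + \mathbf{b}_2,
\qquad \mathbf{z}_i \in \mathbb{R}^{1024},\;
W_1 \in \mathbb{R}^{4096 \times 1024},\;
W_2 \in \mathbb{R}^{4096 \times 4096},\;
\mathbf{h}_i \in \mathbb{R}^{4096}

\#\text{params} = (1024 \cdot 4096 + 4096) + (4096 \cdot 4096 + 4096) = 20{,}979{,}712
```

Under these assumptions, each image occupies 576 of Vicuna-7B v1.5's 4,096-token context window.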


Install

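```bash
git clone git@github.com:opendatalab/MF-RSVLM.git
cd MF-RSVLM
# Specifying Python gives the env its own pip; version 3.10 is an assumption.
conda create -n mf-rsvlm python=3.10 -y
conda activate mf-rsvlm
pip install -r requirements.txt
```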

Repository Layout

```text
MF-RSVLM/
├── mfrsvlm/            # package code
│   ├── model/          # deepstack, builder, consolidate
│   ├── train/          # train_mem.py, train.py, trainer
│   ├── conversation.py
│   ├── constants.py
│   ├── mm_utils.py
│   └── utils.py
├── scripts/            # inference/eval/data-prep helpers + ZeRO configs
│   └── data/
├── checkpoints/        # mf-rsvlm-7b_pretrained, mf-rsvlm-7b_sft
├── models/             # vicuna-7b-v1.5, clip-vit-large-patch14-336, llava-mlp2x
├── requirements.txt
└── README.md
```

Downloads

Models

| Name | Link | Description |
|---|---|---|
| MF-RSVLM Pretrain | https://huggingface.co/FelixKAI/mf_rsvlm_7b_pretrained | Checkpoint after the pretraining (alignment) stage |
| MF-RSVLM SFT | https://huggingface.co/FelixKAI/mfrsvlm-7b_sft | Checkpoint after the SFT stage |
| CLIP ViT-L/14-336 | https://huggingface.co/openai/clip-vit-large-patch14-336 | Vision tower used for pretraining |
| Vicuna-7B v1.5 | https://huggingface.co/lmsys/vicuna-7b-v1.5 | Language model used for pretraining |
| LLaVA-1.5 MLP Projector | https://huggingface.co/liuhaotian/llava-v1.5-mlp2x-336px-pretrain-vicuna-7b-v1.5/tree/main | MLP projector weights |

Datasets

- Pretrain data: https://huggingface.co/datasets/FitzPC/VHM_VersaD

- SFT data: https://huggingface.co/datasets/FelixKAI/RSVLM-SFT
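A minimal sketch for fetching everything into the layout above, assuming the `huggingface-cli` tool is installed (`pip install -U "huggingface_hub[cli]"`). The local paths are assumptions. They follow the repository tree, except that the SFT checkpoint goes to `checkpoints/mfrsvlm-7b_sft` to match the inference commands further down, while the tree lists `mf-rsvlm-7b_sft`. The dataset folders under `data/` are hypothetical. `fetch`, `fetch_all`, and `DRY_RUN` are illustrative helpers, not part of the repository.

```bash
# Hypothetical helpers (not part of MF-RSVLM): download weights and data with
# huggingface-cli. Local paths are assumptions based on the repository tree.

fetch() {  # fetch <hf_repo_id> <local_dir> [extra huggingface-cli args...]
  local repo="$1" dir="$2"
  shift 2
  local cmd=(huggingface-cli download "$repo" --local-dir "$dir" "$@")
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "${cmd[*]}"    # preview the command without downloading
  else
    "${cmd[@]}"
  fi
}

fetch_all() {
  # Base components used to initialize pretraining
  fetch lmsys/vicuna-7b-v1.5 models/vicuna-7b-v1.5 || return
  fetch openai/clip-vit-large-patch14-336 models/clip-vit-large-patch14-336 || return
  fetch liuhaotian/llava-v1.5-mlp2x-336px-pretrain-vicuna-7b-v1.5 models/llava-mlp2x || return
  # Released MF-RSVLM checkpoints
  fetch FelixKAI/mf_rsvlm_7b_pretrained checkpoints/mf-rsvlm-7b_pretrained || return
  fetch FelixKAI/mfrsvlm-7b_sft checkpoints/mfrsvlm-7b_sft || return
  # Training data (dataset repos need --repo-type dataset)
  fetch FitzPC/VHM_VersaD data/VHM_VersaD --repo-type dataset || return
  fetch FelixKAI/RSVLM-SFT data/RSVLM-SFT --repo-type dataset
}
```

Source the snippet, run `DRY_RUN=1 fetch_all` to preview the seven commands, then `fetch_all` to download. Comment out the lines you do not need; for inference alone, only the SFT checkpoint is required.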

Training

MF-RSVLM training has two stages: pretraining for modality alignment, and supervised fine-tuning (SFT) for instruction following.

Pretrain

Run the Slurm script below to start pretraining:

```bash
sh scripts/rs/slurm_pretrain.sh
```

Supervised Fine-Tuning

Run the Slurm script below to start SFT:

```bash
sh scripts/rs/slurm_finetune.sh
```
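The Slurm scripts are not reproduced here, so the exact paths they read are not documented. A quick preflight check before submitting a job can prevent a queued run from failing at load time. The sketch below is a hypothetical helper. It assumes the folder names from the repository tree and assumes per-stage inputs: the LLaVA projector for pretraining, and the pretrained checkpoint for SFT. Adjust both to whatever the scripts actually load.

```bash
# Hypothetical preflight check: verify that expected weight folders exist and
# are non-empty before launching training. Paths and per-stage requirements
# are assumptions based on the repository tree.
preflight() {  # preflight <pretrain|sft>
  local stage="$1" missing=0 d
  local -a dirs=(models/vicuna-7b-v1.5 models/clip-vit-large-patch14-336)
  case "$stage" in
    pretrain) dirs+=(models/llava-mlp2x) ;;
    sft)      dirs+=(checkpoints/mf-rsvlm-7b_pretrained) ;;
    *) echo "usage: preflight pretrain|sft" >&2; return 2 ;;
  esac
  for d in "${dirs[@]}"; do
    # -d: path is a directory; ls -A: directory has at least one entry
    if [ ! -d "$d" ] || [ -z "$(ls -A "$d")" ]; then
      echo "missing or empty: $d" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

Usage, from the repository root: `preflight pretrain && sh scripts/rs/slurm_pretrain.sh`.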

Inference Demos

Single-Sample Inference (CLI)

Use the lightweight helper to test a single image-question pair. This script loads the model once and prints the response directly in the terminal.

```bash
CUDA_VISIBLE_DEVICES=0 python scripts/run_mfrsvlm_inference.py \
  --model-path checkpoints/mfrsvlm-7b_sft \
  --image-path /path/to/image.png \
  --prompt "What is shown in the image?"
```

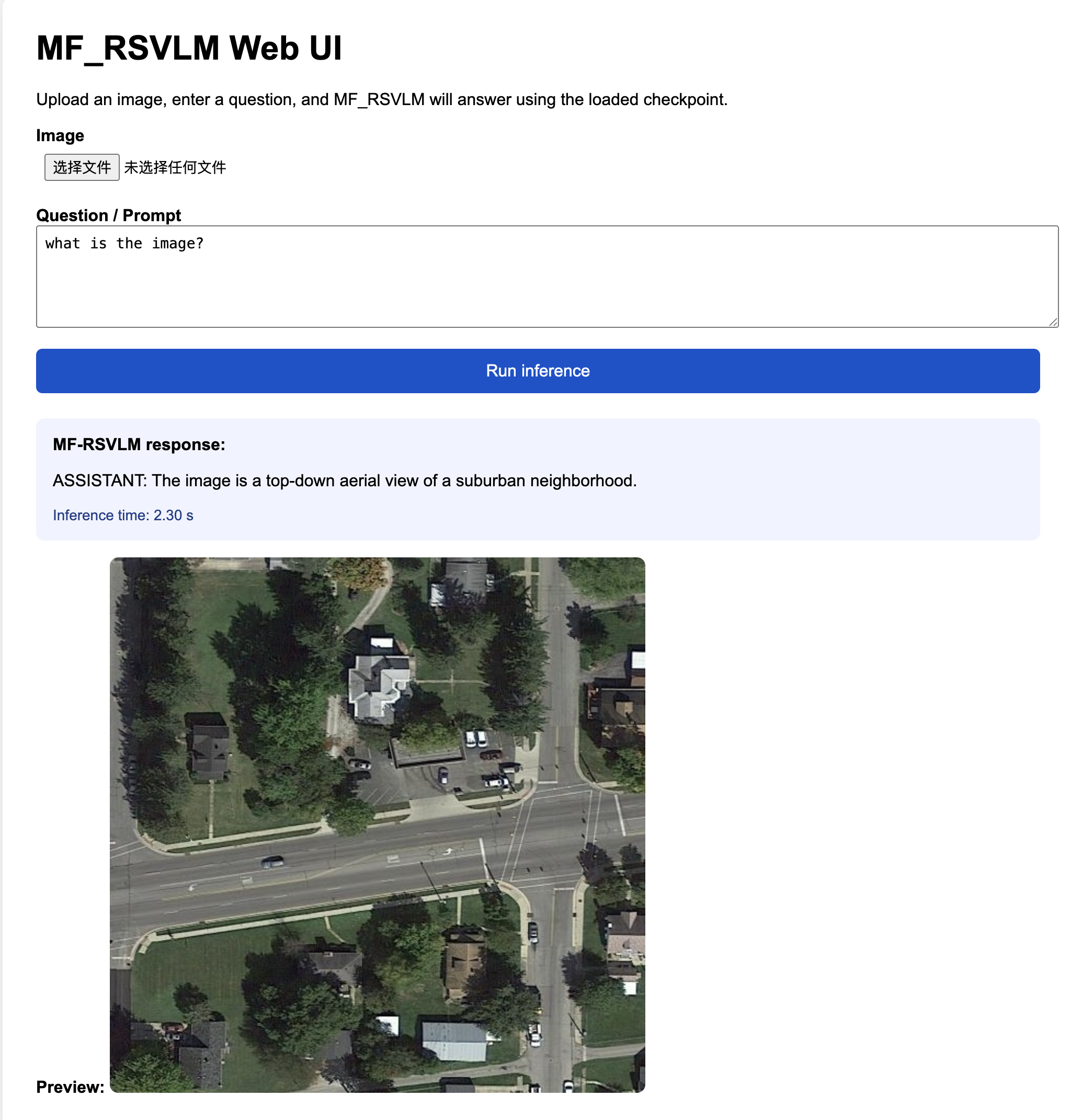
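To run the same prompt over a folder of images, a simple loop around the CLI works, at the cost of reloading the checkpoint for every image. For many images, the web demo below, which keeps the model loaded, is likely faster. This sketch assumes the script prints the answer to stdout; anything else it prints will also land in the output files. `batch_infer` and the `INFER_CMD` override are hypothetical, not flags of the real script.

```bash
# Hypothetical helper: run the single-sample CLI on every .png/.jpg in a folder
# and save each answer next to its image as <name>.txt.
# Assumptions: the script prints the answer on stdout; INFER_CMD is an optional
# override (e.g. for testing). Each call reloads the 7B checkpoint.
batch_infer() {  # batch_infer <image_dir> <prompt> [model_path]
  local img_dir="$1" prompt="$2"
  local model="${3:-checkpoints/mfrsvlm-7b_sft}"
  local img
  for img in "$img_dir"/*.png "$img_dir"/*.jpg; do
    [ -e "$img" ] || continue   # glob matched nothing; skip the literal pattern
    # Unquoted on purpose so the default splits into program + script path.
    ${INFER_CMD:-python scripts/run_mfrsvlm_inference.py} \
      --model-path "$model" \
      --image-path "$img" \
      --prompt "$prompt" > "${img%.*}.txt" \
      || echo "inference failed: $img" >&2
  done
}
```

Usage: `export CUDA_VISIBLE_DEVICES=0`, then `batch_infer /path/to/images "What is shown in the image?"`. Images that share a base name (`a.png` and `a.jpg`) write to the same `a.txt`, so the later one wins.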

Web Demo (Full-Model UI)

Start a simple Flask web interface for interactive evaluation. The server loads the checkpoint once, then serves a browser UI for repeated queries.

```bash
CUDA_VISIBLE_DEVICES=0 python scripts/run_mf-rsvlm_web_server.py \
  --model-path checkpoints/mfrsvlm-7b_sft \
  --host 0.0.0.0 \
  --port 7860
```

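Open http://localhost:7860 in your browser, upload an image, and enter a question to get the model's response. Because `--host 0.0.0.0` listens on all network interfaces, you can also reach the UI from another machine at `http://<server-ip>:7860`.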
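Because the server loads the full checkpoint before it starts answering, scripted use (for example, starting the server in the background and then running smoke tests) may need to wait until the port responds. The sketch below is a minimal polling loop. It assumes `curl` is available and that the UI is served at the root URL, as described above. `wait_for_ui` is a hypothetical helper.

```bash
# Hypothetical helper: poll the web demo until it answers or a timeout expires.
# Assumes the UI is served at the root URL.
wait_for_ui() {  # wait_for_ui [url] [timeout_seconds]
  local url="${1:-http://localhost:7860}" timeout="${2:-600}" waited=0
  # -f: fail on HTTP errors; --max-time: don't hang on a stalled connection
  until curl -fsS --max-time 5 -o /dev/null "$url" 2>/dev/null; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "no response from $url after ${timeout}s" >&2
      return 1
    fi
    sleep 5
    waited=$((waited + 5))
  done
  echo "web demo is up at $url"
}
```

For example, append `&` to the server command to run it in the background, then call `wait_for_ui http://localhost:7860 900` before opening the browser.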

Evaluation

We provide a dedicated evaluation toolkit: RSEvalKit.

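```bash
git clone https://github.com/fitzpchao/RSEvalKit
cd RSEvalKit
# Specifying Python gives the env its own pip; version 3.10 is an assumption.
conda create -n rseval python=3.10 -y
conda activate rseval
pip install -r requirements.txt
```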

Download the model weights and datasets, then follow the RSEvalKit README for one-click evaluation.

Citation

```bibtex
@article{dang2025fuse,
  title={FUSE-RSVLM: Feature Fusion Vision-Language Model for Remote Sensing},
  author={Dang, Yunkai and Wang, Donghao and Yang, Jiacheng and Jiang, Yifan and Zhu, Meiyi and Yang, Yuekun and Wang, Cong and Fan, Qi and Li, Wenbin and Gao, Yang},
  journal={arXiv preprint arXiv:2512.24022},
  year={2025}
}
```

Acknowledgement

We gratefully acknowledge these wonderful works:
